Unlike standard single-sensor cameras, prism-based cameras place multiple sensors behind a single lens and capture simultaneous images of multiple wavebands along a shared optical path. These cameras split incoming light into multiple channels, with pixel-precise alignment regardless of motion or viewing angle.

The role of the lens

When imaging with a camera, the lens is a crucial part of the system. It focuses light rays arriving at specific angles onto the sensor, creating a two-dimensional representation of the environment. Camera lenses typically consist of multiple lens elements that differ in material, thickness, radius, coating, and so on. The result is a variety of lenses with different fields of view, optimized for different cameras and applications, and spanning a wide range of prices.

We often stress how important it is to choose the right lens for a prism-based camera. This means more than choosing a lens with the right focal length and image circle, and with an aperture suited to the expected frame rate, depth of focus, and lighting conditions. It also means choosing one that is designed specifically for prism-based cameras. But why? Why shouldn’t you just use a standard lens, designed for single-sensor cameras, that fits the sensor and your application?
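The first two criteria above, focal length and image circle, can be checked with simple geometry. The sketch below computes the horizontal angle of view of a rectilinear lens focused at infinity and tests whether a lens's image circle covers the sensor diagonal. The sensor size and lens values are hypothetical examples, and the function names are illustrative only.

```python
import math

def horizontal_fov_deg(focal_length_mm: float, sensor_width_mm: float) -> float:
    """Horizontal angle of view of a rectilinear lens focused at infinity."""
    return math.degrees(2.0 * math.atan(sensor_width_mm / (2.0 * focal_length_mm)))

def image_circle_covers_sensor(image_circle_mm: float,
                               sensor_width_mm: float,
                               sensor_height_mm: float) -> bool:
    """True if the lens image circle is at least as large as the sensor diagonal."""
    return image_circle_mm >= math.hypot(sensor_width_mm, sensor_height_mm)

# Hypothetical example: an 8.8 x 6.6 mm sensor behind a 16 mm lens.
print(f"HFOV: {horizontal_fov_deg(16.0, 8.8):.1f} deg")
print("16 mm image circle covers sensor:", image_circle_covers_sensor(16.0, 8.8, 6.6))
print("8 mm image circle covers sensor:", image_circle_covers_sensor(8.0, 8.8, 6.6))
```

These checks are necessary but not sufficient for a prism camera. As the rest of this article explains, ray angles and chromatic correction matter just as much.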

How the prism affects the imaging

There are several reasons. The first is that a thick glass component, the prism, sits between the rear element of the lens and the sensor, and it changes the optical path according to the laws of refraction. The second is that these lenses are used over a broad wavelength range, from the short visible wavelengths (blue) all the way to the near infrared. Optimizing and manufacturing lenses for this full spectral range is a complicated and costly process. The challenge keeps growing as technology advances and more cameras appear with extended spectral sensitivity, covering not only the visible and near infrared but also the shortwave infrared waveband.

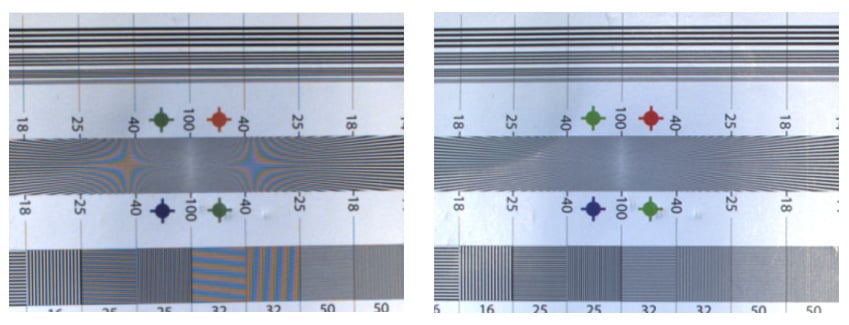

With the help of dichroic filters on its surfaces, a prism can separate light into different wavebands, so each sensor is hit only by photons from a pre-filtered wavelength range. This gives much better color separation than a Bayer-filter sensor can provide. With a Bayer filter, the two missing color values at each pixel must be interpolated from neighboring pixels. This often produces unwanted optical artifacts, such as false colors along edges or moiré patterns.

Artifacts (moiré patterns) caused by Bayer interpolation (left) compared to image captured with multi-sensor prism camera (right)
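The sketch below shows where such false colors come from. It applies a simple bilinear demosaic to an RGGB Bayer mosaic of a perfectly gray scene that contains one sharp edge. Bilinear interpolation is used only because it is the simplest demosaicing method. More advanced algorithms reduce these artifacts but do not always eliminate them. The function names and the tiny test image are illustrative assumptions.

```python
from typing import List, Tuple

Image = List[List[float]]
RGB = Tuple[float, float, float]

def bayer_color(y: int, x: int) -> str:
    """Filter color at photosite (y, x) for an RGGB Bayer pattern."""
    if y % 2 == 0:
        return "R" if x % 2 == 0 else "G"
    return "G" if x % 2 == 0 else "B"

def bilinear_demosaic(raw: Image) -> List[List[RGB]]:
    """Keep each photosite's measured color; estimate the two missing colors by
    averaging the same-colored photosites in the surrounding 3x3 window."""
    h, w = len(raw), len(raw[0])
    out: List[List[RGB]] = []
    for y in range(h):
        row: List[RGB] = []
        for x in range(w):
            rgb = []
            for color in ("R", "G", "B"):
                if bayer_color(y, x) == color:
                    rgb.append(raw[y][x])  # measured directly at this photosite
                    continue
                samples = [raw[j][i]
                           for j in range(max(0, y - 1), min(h, y + 2))
                           for i in range(max(0, x - 1), min(w, x + 2))
                           if bayer_color(j, i) == color]
                rgb.append(sum(samples) / len(samples))
            row.append((rgb[0], rgb[1], rgb[2]))
        out.append(row)
    return out

# A perfectly gray scene: dark left half, bright right half (a sharp vertical edge).
# For a gray scene every photosite records the scene brightness, whatever its filter.
raw = [[0.0 if x < 3 else 1.0 for x in range(6)] for _ in range(6)]
rgb = bilinear_demosaic(raw)
print(rgb[2][0])  # far from the edge: (0.0, 0.0, 0.0), still gray
print(rgb[2][2])  # next to the edge: (0.0, 0.25, 0.5), a false bluish tint
```

A prism camera measures all three colors at every pixel, so this interpolation step, and the false color it creates, does not occur.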

Working all the angles

Another important consideration for prism camera lenses has to do with managing the angle of incidence for the light rays entering the prism block.

We know from the laws of refraction that light rays bend towards the normal when passing from a rarer (lower-index) medium into a denser (higher-index) one, and away from the normal when passing from a denser medium into a rarer one. Light obeys this rule inside the prism as well. The prism block consists of several layers, including dichroic filters. These filters provide accurate wavelength separation with a steep transmittance edge, but they work based on optical interference, so their behavior is inherently angle-dependent. Depending on the angle at which light rays strike the filter, different amounts of light are transmitted. This can cause the color shading effects that you can see in the image. The more the angles of incidence vary, the more visible the shading becomes.
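Both effects in the paragraph above can be written down compactly. Snell's law gives the bending at a boundary. For interference filters, a commonly used thin-film approximation describes how the filter edge moves towards shorter wavelengths as the angle of incidence grows: λ(θ) = λ₀·√(1 − (n₀·sin θ / n_eff)²). The sketch below evaluates both. The effective coating index `n_eff = 1.8`, the glass index of 1.52 and the 600 nm edge are assumed example values, not data for any specific JAI prism.

```python
import math

def refraction_angle_deg(theta_in_deg: float, n1: float, n2: float) -> float:
    """Snell's law, n1*sin(t1) = n2*sin(t2): angle of the refracted ray in degrees."""
    s = n1 * math.sin(math.radians(theta_in_deg)) / n2
    if abs(s) > 1.0:
        raise ValueError("total internal reflection: no refracted ray")
    return math.degrees(math.asin(s))

def filter_edge_nm(edge_normal_nm: float, theta_deg: float,
                   n0: float = 1.0, n_eff: float = 1.8) -> float:
    """Thin-film approximation of an interference filter edge at incidence angle theta.
    edge_normal_nm is the edge at normal incidence, n0 the index of the incident
    medium, and n_eff an ASSUMED effective index of the coating stack."""
    s = n0 * math.sin(math.radians(theta_deg)) / n_eff
    return edge_normal_nm * math.sqrt(1.0 - s * s)

# Air (n = 1.0) into glass with an assumed n = 1.52: the ray bends toward the normal.
print(f"30.0 deg in air -> {refraction_angle_deg(30.0, 1.0, 1.52):.2f} deg in glass")
# A hypothetical 600 nm filter edge seen at increasing angles of incidence.
for angle in (0, 5, 10, 20):
    print(f"{angle:2d} deg: edge at {filter_edge_nm(600.0, angle):.1f} nm")
```

The edge shift is only a few nanometers at small angles and grows quickly as the angle increases. Light close to a channel boundary is therefore transmitted differently depending on where in the field it arrives.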

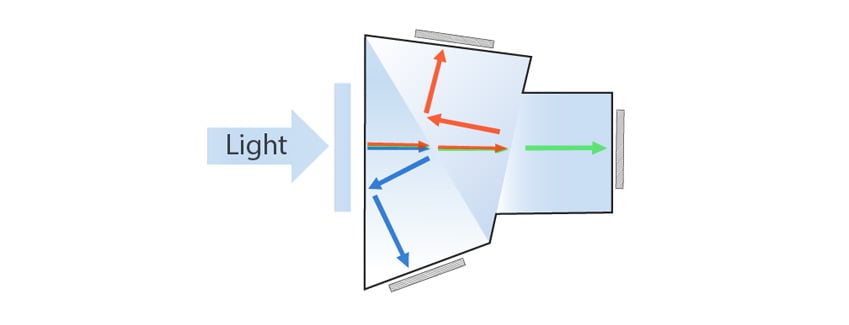

Separation of light through R-G-B prism using dichroic filters


This is where lens pupils become important. The entrance and exit pupils of a lens are virtual "windows" that define how light rays enter and exit the lens. These virtual apertures, which can be seen when looking into the lens from the front or the back, play a key role in how light beams from different angles enter the lens, and how they travel towards the sensor after exiting the lens.

To minimize shading caused by the angle dependency of the dichroic filters on the internal surfaces of the prism, light rays leaving the lens must strike the prism surfaces as close to perpendicular as possible. This can be achieved by virtually "moving" the exit pupil farther away from the prism during the optical design process. The farther the exit pupil is from the image plane, the smaller the angle at which rays reach the edges of the sensor.
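The link between exit pupil position and ray angle is simple geometry. If the chief ray is treated as a straight line from the center of the exit pupil to an image point at height h, and the pupil lies a distance d in front of the image plane, then the chief ray angle is θ = atan(h / d). The sketch below assumes a hypothetical 5.5 mm sensor half-diagonal and three hypothetical exit pupil distances. It ignores refraction in the prism glass, which reduces the angle inside the glass (Snell's law) but does not change the trend.

```python
import math

def chief_ray_angle_deg(image_height_mm: float, exit_pupil_distance_mm: float) -> float:
    """Angle (degrees) between the chief ray and the optical axis at a given image height.

    Simple geometric model: the chief ray runs straight from the center of the exit
    pupil to the image point, and the pupil distance is measured from the image plane."""
    return math.degrees(math.atan2(image_height_mm, exit_pupil_distance_mm))

corner_mm = 5.5  # hypothetical half-diagonal of a 2/3"-class sensor
for pupil_mm in (30.0, 80.0, 150.0):  # hypothetical exit pupil distances
    print(f"exit pupil at {pupil_mm:.0f} mm -> corner ray angle "
          f"{chief_ray_angle_deg(corner_mm, pupil_mm):.1f} deg")
```

In the limit of an infinitely distant exit pupil (an image-space telecentric design), the chief rays run parallel to the optical axis at every image height.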

Because the exit pupil location does not play an important role in imaging with single-sensor cameras, standard lenses are not optimized to achieve specific ray angles. The added design complexity and the additional or larger lens elements would increase the price without a significant benefit to the customer. As a result, if a standard lens is used on a prism camera, light rays will hit the prism surfaces at relatively larger angles, so some of them will not be transmitted. The image darkens locally in the affected waveband, which shows up as color gradients across the image.
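Combining the two previous ideas gives a rough picture of how this darkening builds up from the center to the corner of the image. The sketch below models a hypothetical short-pass filter edge at 500 nm as a logistic curve and follows a single wavelength (495 nm) just inside the passband. It shifts the edge with the chief ray angle at each image height and reports the transmission relative to the image center. Every number, including the two exit pupil distances standing in for a "standard" and a "prism-optimized" lens, is an assumption for illustration. The model also treats the filter as if it were perpendicular to the optical axis. Real prism dichroics are tilted, so rays on opposite sides of the field shift the edge in opposite directions, which is one reason the effect appears as a gradient across the image.

```python
import math

def chief_ray_angle_deg(h_mm: float, pupil_mm: float) -> float:
    """Chief ray angle at image height h for an exit pupil pupil_mm from the image plane."""
    return math.degrees(math.atan2(h_mm, pupil_mm))

def edge_nm(edge_normal_nm: float, theta_deg: float, n_eff: float = 1.8) -> float:
    """Thin-film approximation of the filter edge shift (n_eff is an ASSUMED value)."""
    s = math.sin(math.radians(theta_deg)) / n_eff
    return edge_normal_nm * math.sqrt(1.0 - s * s)

def shortpass_transmission(wavelength_nm: float, edge: float, width_nm: float = 3.0) -> float:
    """Logistic stand-in for a short-pass edge: near 1 below the edge, near 0 above it."""
    return 1.0 / (1.0 + math.exp((wavelength_nm - edge) / width_nm))

def relative_signal(h_mm: float, pupil_mm: float,
                    wavelength_nm: float = 495.0, edge_normal_nm: float = 500.0) -> float:
    """Transmission at image height h divided by the on-axis transmission."""
    on_axis = shortpass_transmission(wavelength_nm, edge_normal_nm)
    theta = chief_ray_angle_deg(h_mm, pupil_mm)
    return shortpass_transmission(wavelength_nm, edge_nm(edge_normal_nm, theta)) / on_axis

# Hypothetical lenses: exit pupil 30 mm ("standard") vs 150 mm ("prism-optimized").
for pupil in (30.0, 150.0):
    profile = [round(relative_signal(h, pupil), 3) for h in (0.0, 2.75, 5.5)]
    print(f"exit pupil {pupil:.0f} mm: center -> corner {profile}")
```

With the short exit pupil distance, the corner loses a noticeable share of its signal at this wavelength. With the distant exit pupil, the loss is close to zero.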

Color gradients caused by the angle-dependency of the dichroic filters with a standard lens (left) and with a prism-specific lens (right)


Because even the most advanced prism-optimized lenses can cause slight color gradients, JAI has implemented an in-camera feature called shading correction to address residual discoloration. This compensation method is designed to produce a flat, equal response to light in all three color channels, provided the shading stays within a reasonable range.
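JAI's in-camera implementation is not described here. The sketch below shows a generic flat-field approach that achieves the same goal. It captures a uniform white target, computes a per-pixel gain for each channel that lifts every pixel to one common level, and applies those gains to later images. The `max_gain` limit is a hypothetical stand-in for "a reasonable range", and all names and values are illustrative.

```python
from typing import Dict, List

Image = List[List[float]]  # one color channel, rows of pixel values

def shading_gains(flat: Dict[str, Image], max_gain: float = 2.0) -> Dict[str, Image]:
    """Per-pixel, per-channel gains computed from a capture of a uniform white target.

    The target level is the brightest value in any channel, so every gain is >= 1.
    max_gain is a HYPOTHETICAL limit standing in for 'a reasonable range' of shading."""
    target = max(v for img in flat.values() for row in img for v in row)
    gains: Dict[str, Image] = {}
    for ch, img in flat.items():
        rows = []
        for row in img:
            if min(row) <= 0 or target / min(row) > max_gain:
                raise ValueError(f"shading in channel {ch} is outside the correctable range")
            rows.append([target / v for v in row])
        gains[ch] = rows
    return gains

def apply_gains(image: Dict[str, Image], gains: Dict[str, Image]) -> Dict[str, Image]:
    """Multiply every pixel by the gain stored for its channel and position."""
    return {ch: [[v * g for v, g in zip(row, g_row)]
                 for row, g_row in zip(img, gains[ch])]
            for ch, img in image.items()}

# Hypothetical 1 x 3 flat-field capture: red darkens and blue brightens towards the right.
flat = {"R": [[200.0, 190.0, 170.0]],
        "G": [[200.0, 200.0, 200.0]],
        "B": [[200.0, 205.0, 210.0]]}
corrected = apply_gains(flat, shading_gains(flat))
print({ch: [round(v, 6) for v in img[0]] for ch, img in corrected.items()})
```

After correction, all three channels report the same level (210) at every position. A channel that would need more than `max_gain` is rejected rather than amplified, because very large gains also amplify noise.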

Focal plane considerations

But color shading is not the only image quality factor that needs to be addressed. The refractive index of optical glass depends on wavelength, so light rays of different wavelengths are refracted by different amounts at each boundary between materials. As a result, different spectral channels can have different focal distances. If a non-optimized lens system is used, some wavebands may appear blurred or shifted, especially when the lens images in both the visible and the near infrared (NIR) range.
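How large this effect can be is easiest to see for the simplest possible lens. The sketch below uses an uncorrected thin singlet, whose optical power is proportional to (n − 1), so f(λ) = f_ref · (n_ref − 1) / (n(λ) − 1). It approximates the glass index with the two-term Cauchy formula n(λ) = A + B/λ², using commonly quoted approximate coefficients for N-BK7. The 25 mm reference focal length is hypothetical. Real machine vision lenses are color-corrected, so this exaggerates the effect, but it shows why the NIR focus in particular drifts away from the visible.

```python
def cauchy_index(wavelength_um: float, a: float = 1.5046, b_um2: float = 0.00420) -> float:
    """Two-term Cauchy approximation n(lambda) = A + B / lambda^2.
    The defaults are commonly quoted APPROXIMATE values for N-BK7 glass."""
    return a + b_um2 / wavelength_um ** 2

def singlet_focal_length_mm(wavelength_um: float, f_ref_mm: float = 25.0,
                            ref_um: float = 0.5876) -> float:
    """Thin-singlet power scales with (n - 1), so f(lambda) = f_ref * (n_ref - 1) / (n - 1)."""
    return f_ref_mm * (cauchy_index(ref_um) - 1.0) / (cauchy_index(wavelength_um) - 1.0)

for wl_um in (0.45, 0.55, 0.65, 0.85):  # blue, green, red, NIR
    print(f"{wl_um * 1000:.0f} nm: f = {singlet_focal_length_mm(wl_um):.3f} mm")
```

For this uncorrected singlet, the blue and NIR focal planes end up several tenths of a millimeter apart, far more than the depth of focus of a typical machine vision setup.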

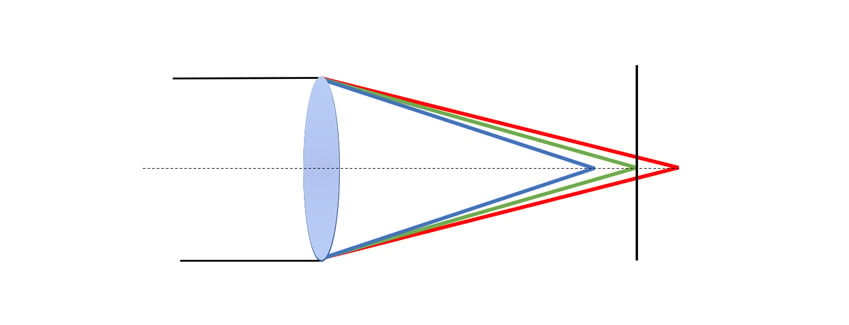

When a lens cannot focus different wavelengths in the same focal plane, the effect is called longitudinal chromatic aberration. If this aberration is large, it becomes impossible to achieve a sharp image in all channels of a multi-sensor camera such as JAI's Apex Series, Fusion Series, and Fusion Flex-Eye cameras. Instead, either some of the visible channels or the NIR channel will be slightly out of focus.
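Whether "slightly out of focus" matters can be estimated by comparing the spread of the channels' focal planes with the depth of focus. The sketch below assumes that all sensors sit at the same optical path length behind the lens, so one focus setting moves every channel's focal plane together. It uses the common approximation that total image-side depth of focus ≈ 2·N·c, where N is the f-number and c the acceptable circle of confusion. The focal plane offsets, f/2.8 and c = 5 µm are hypothetical values.

```python
from typing import Dict, Tuple

def depth_of_focus_mm(f_number: float, coc_mm: float) -> float:
    """Approximate total image-side depth of focus: 2 * N * c."""
    return 2.0 * f_number * coc_mm

def common_focus(focal_planes_mm: Dict[str, float], f_number: float,
                 coc_mm: float) -> Tuple[float, bool]:
    """Best single focus position for all channels and whether every channel is sharp.

    The best compromise lies midway between the extreme focal planes; all channels
    are sharp if the spread between those extremes fits within the depth of focus."""
    nearest, farthest = min(focal_planes_mm.values()), max(focal_planes_mm.values())
    spread = farthest - nearest
    return (nearest + farthest) / 2.0, spread <= depth_of_focus_mm(f_number, coc_mm)

# Hypothetical focal plane offsets (mm, relative to green) for one lens.
planes = {"B": -0.004, "G": 0.0, "R": 0.003, "NIR": 0.030}
print(common_focus(planes, f_number=2.8, coc_mm=0.005))     # NIR spoils the compromise
visible = {ch: z for ch, z in planes.items() if ch != "NIR"}
print(common_focus(visible, f_number=2.8, coc_mm=0.005))    # visible channels alone fit
```

Stopping down (a larger N) widens the depth of focus, but it costs light and, at some point, resolution through diffraction. That is why correcting the lens itself is preferable.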

Longitudinal chromatic aberration


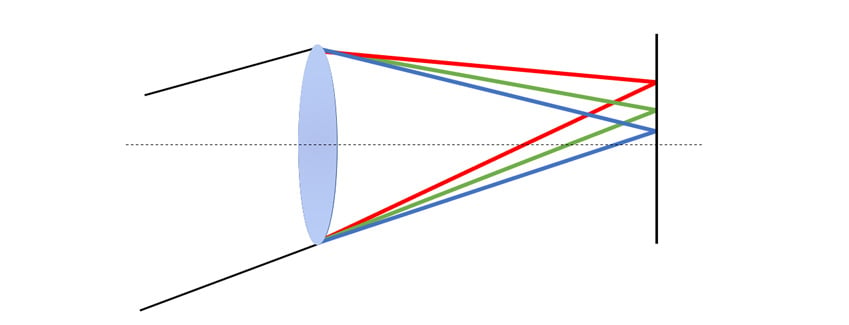

The other crucial aberration is lateral chromatic aberration. Here, light rays of different wavelengths are imaged at slightly shifted positions, causing colored fringes around objects. Because the magnification differs slightly for each waveband, the effect becomes more pronounced towards the edges of the image.
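If lateral chromatic aberration is modeled as a pure magnification difference about the optical center, its growth towards the edges follows directly. A point at radius r in the reference channel lands at r·m in a channel with relative magnification m, so the offset is r·(m − 1). The sketch below evaluates this for a hypothetical 2048 × 1536 sensor and a hypothetical 0.05 % magnification difference. Real lenses can also show higher-order (non-linear) lateral color, which this linear model ignores.

```python
import math

def lateral_shift_px(x: float, y: float, cx: float, cy: float,
                     rel_magnification: float) -> float:
    """Radial offset (pixels) of one channel relative to the reference channel at (x, y).

    Pure magnification model about the optical center (cx, cy): a point at radius r
    lands at r * rel_magnification, so the offset r * (rel_magnification - 1)
    grows linearly towards the image edges."""
    r = math.hypot(x - cx, y - cy)
    return r * (rel_magnification - 1.0)

# Hypothetical 2048 x 1536 sensor; one channel magnified 0.05 % more than the reference.
cx, cy = (2048 - 1) / 2, (1536 - 1) / 2
for name, (x, y) in {"center": (cx, cy), "mid-field": (cx / 2, cy / 2),
                     "corner": (0.0, 0.0)}.items():
    print(f"{name}: {lateral_shift_px(x, y, cx, cy, 1.0005):.2f} px")
```

Even this small magnification difference moves the corner by more than half a pixel, which is significant for sensors that are aligned with sub-pixel accuracy.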

JAI has worked hard to ensure that the sensors in all of our prism-based cameras are aligned with sub-pixel accuracy. It is therefore highly beneficial to select lenses that do not introduce lateral aberration between the channels, since such aberration would negate the benefits of this precise sensor alignment. However, unlike longitudinal chromatic aberration, lateral chromatic aberration can to some degree be compensated in software without a significant loss of image detail. To learn more about which cameras support this JAI-patented feature, please contact our experts.
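The source does not describe how JAI's patented compensation works. The sketch below shows one generic way to compensate a pure magnification difference in software. It resamples the affected channel radially about the frame center, assumed here to coincide with the optical center, using bilinear interpolation. Each output pixel reads the input at the position where that scene point actually landed in the mismatched channel. The function names and the test pattern are illustrative assumptions.

```python
from typing import List

Image = List[List[float]]

def bilinear_sample(img: Image, x: float, y: float) -> float:
    """Bilinearly interpolated value at (x, y); coordinates outside the frame are clamped."""
    h, w = len(img), len(img[0])
    x = min(max(x, 0.0), w - 1.0)
    y = min(max(y, 0.0), h - 1.0)
    x0, y0 = int(x), int(y)
    x1, y1 = min(x0 + 1, w - 1), min(y0 + 1, h - 1)
    fx, fy = x - x0, y - y0
    top = img[y0][x0] * (1.0 - fx) + img[y0][x1] * fx
    bottom = img[y1][x0] * (1.0 - fx) + img[y1][x1] * fx
    return top * (1.0 - fy) + bottom * fy

def match_magnification(channel: Image, rel_magnification: float) -> Image:
    """Resample a channel so its magnification matches the reference channel.

    A scene point that the reference channel images at radius r appears at
    r * rel_magnification in this channel, so each output pixel reads the input
    at that scaled position about the frame center (assumed to be the optical center)."""
    h, w = len(channel), len(channel[0])
    cx, cy = (w - 1) / 2.0, (h - 1) / 2.0
    return [[bilinear_sample(channel,
                             cx + (x - cx) * rel_magnification,
                             cy + (y - cy) * rel_magnification)
             for x in range(w)]
            for y in range(h)]

# Hypothetical test pattern: a horizontal ramp whose value equals the x coordinate,
# imaged by a channel with 1 % lower magnification than the reference.
w, h, m = 9, 7, 0.99
cx = (w - 1) / 2.0
shrunk = [[cx + (x - cx) / m for x in range(w)] for _ in range(h)]
restored = match_magnification(shrunk, m)
print(all(abs(v - x) < 1e-9 for x, v in enumerate(restored[3])))  # True
```

Because the correction only resamples positions, fine detail is largely preserved. Longitudinal aberration, by contrast, blurs the channel, and blur cannot be undone this simply.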

Lateral chromatic aberration


Finding the right combination

Choosing a prism camera for a machine vision system typically indicates a desire, or a requirement, to achieve higher precision and higher overall image quality for a particular application. That is why it is so important to pair it with a lens optimized to provide an equally high level of sharpness from corner to corner across the entire image. JAI's prism-optimized lenses are designed to do just that, providing a consistent MTF and balanced relative illumination over the whole sensor.

It is important to mention, however, that in the world of optics a perfect lens does not exist. Aberrations can be reduced, but they cannot be completely eliminated. The final lens choice is usually a trade-off between benefits and requirements. The question becomes: "What is most important for the application?" Is it low color shading, a high level of sharpness in all channels, pixel-precise image matching in all wavebands, or lower overall cost? Our experts will be happy to help you make the right choice.